v0.16.0 DeepFloyd IF & ControlNet v1.1

DeepFloyd's IF: The open-sourced Imagen

IF

IF is a pixel-based text-to-image generation model and was released in late April 2023 by DeepFloyd.

The model architecture is strongly inspired by Google's closed-sourced Imagen and a novel state-of-the-art open-source text-to-image model with a high degree of photorealism and language understanding:

Installation

pip install torch --upgrade # diffusers' IF is optimized for torch 2.0

pip install diffusers --upgrade

Accept the License

Before you can use IF, you need to accept its usage conditions. To do so:

- Make sure to have a Hugging Face account and be logged in

- Accept the license on the model card of DeepFloyd/IF-I-XL-v1.0

- Log-in locally

from huggingface_hub import login

login()and enter your Hugging Face Hub access token.

Code example

from diffusers import DiffusionPipeline

from diffusers.utils import pt_to_pil

import torch

# stage 1

stage_1 = DiffusionPipeline.from_pretrained("DeepFloyd/IF-I-XL-v1.0", variant="fp16", torch_dtype=torch.float16)

stage_1.enable_model_cpu_offload()

# stage 2

stage_2 = DiffusionPipeline.from_pretrained(

"DeepFloyd/IF-II-L-v1.0", text_encoder=None, variant="fp16", torch_dtype=torch.float16

)

stage_2.enable_model_cpu_offload()

# stage 3

safety_modules = {

"feature_extractor": stage_1.feature_extractor,

"safety_checker": stage_1.safety_checker,

"watermarker": stage_1.watermarker,

}

stage_3 = DiffusionPipeline.from_pretrained(

"stabilityai/stable-diffusion-x4-upscaler", **safety_modules, torch_dtype=torch.float16

)

stage_3.enable_model_cpu_offload()

prompt = 'a photo of a kangaroo wearing an orange hoodie and blue sunglasses standing in front of the eiffel tower holding a sign that says "very deep learning"'

generator = torch.manual_seed(1)

# text embeds

prompt_embeds, negative_embeds = stage_1.encode_prompt(prompt)

# stage 1

image = stage_1(

prompt_embeds=prompt_embeds, negative_prompt_embeds=negative_embeds, generator=generator, output_type="pt"

).images

pt_to_pil(image)[0].save("./if_stage_I.png")# stage 2

image = stage_2(

image=image,

prompt_embeds=prompt_embeds,

negative_prompt_embeds=negative_embeds,

generator=generator,

output_type="pt",

).images

pt_to_pil(image)[0].save("./if_stage_II.png")# stage 3

image = stage_3(prompt=prompt, image=image, noise_level=100, generator=generator).images

image[0].save("./if_stage_III.png")For more details about speed and memory optimizations, please have a look at the blog or docs below.

Useful links

👉 The official codebase

👉 Blog post

👉 Space Demo

👉 In-detail docs

ControlNet v1.1

Lvmin Zhang has released improved ControlNet checkpoints as well as a couple of new ones.

You can find all 🧨 Diffusers checkpoints here

Please have a look directly at the model cards on how to use the checkpoins:

Improved checkpoints:

| Model Name | Control Image Overview | Control Image Example | Generated Image Example |

|---|---|---|---|

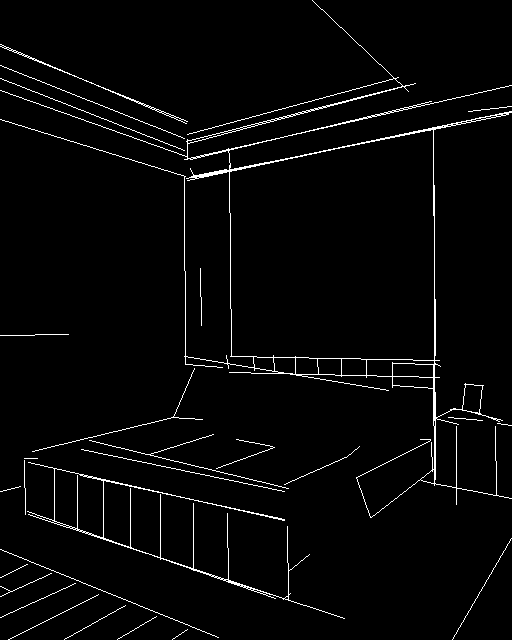

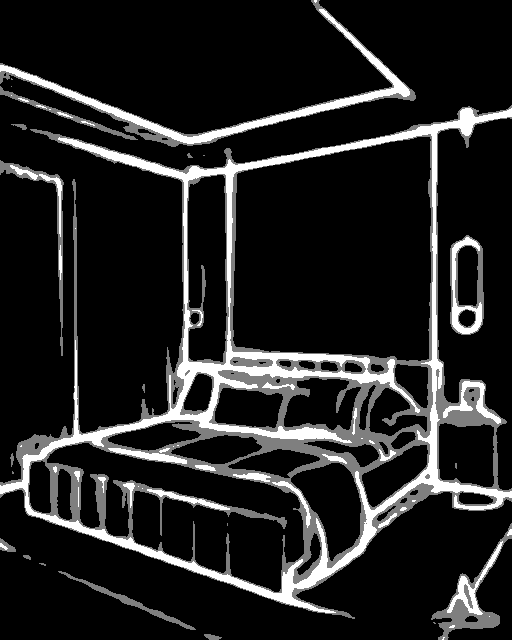

| lllyasviel/control_v11p_sd15_canny Trained with canny edge detection |

A monochrome image with white edges on a black background. |  |

|

| lllyasviel/control_v11p_sd15_mlsd Trained with multi-level line segment detection |

An image with annotated line segments. |  |

|

| lllyasviel/control_v11f1p_sd15_depth Trained with depth estimation |

An image with depth information, usually represented as a grayscale image. |  |

|

| lllyasviel/control_v11p_sd15_normalbae Trained with surface normal estimation |

An image with surface normal information, usually represented as a color-coded image. |  |

|

| lllyasviel/control_v11p_sd15_seg Trained with image segmentation |

An image with segmented regions, usually represented as a color-coded image. |  |

|

| lllyasviel/control_v11p_sd15_lineart Trained with line art generation |

An image with line art, usually black lines on a white background. |  |

|

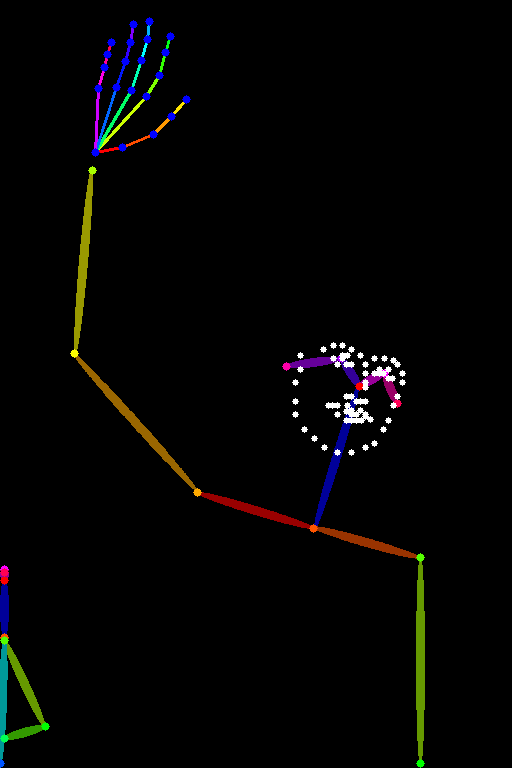

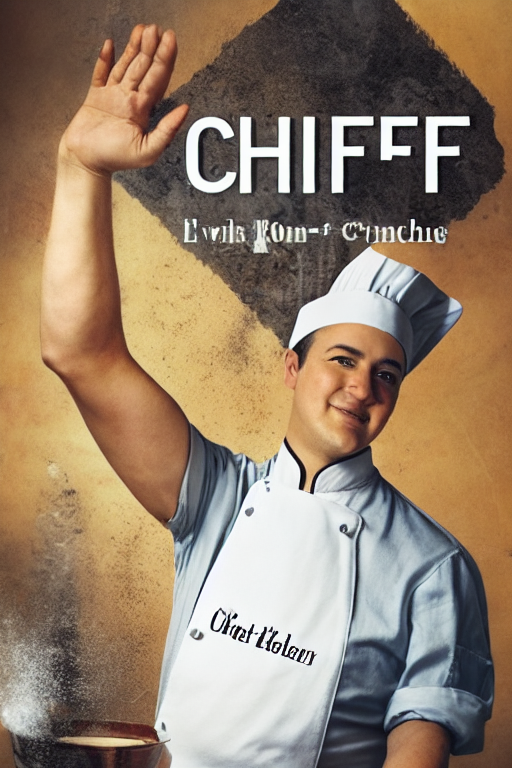

| lllyasviel/control_v11p_sd15_openpose Trained with human pose estimation |

An image with human poses, usually represented as a set of keypoints or skeletons. |  |

|

| lllyasviel/control_v11p_sd15_scribble Trained with scribble-based image generation |

An image with scribbles, usually random or user-drawn strokes. |  |

|

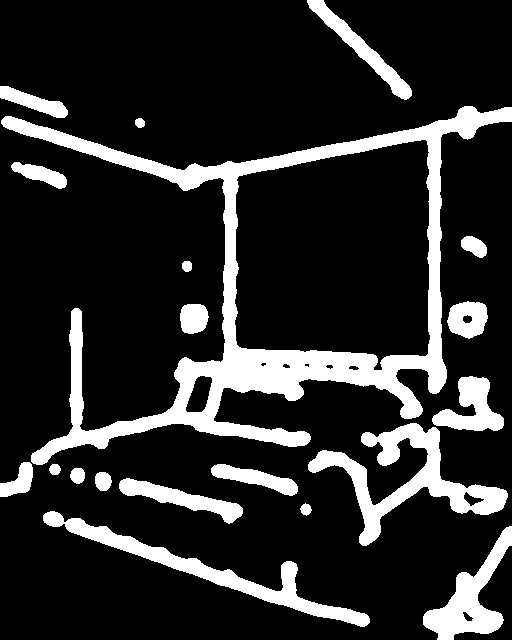

| lllyasviel/control_v11p_sd15_softedge Trained with soft edge image generation |

An image with soft edges, usually to create a more painterly or artistic effect. |  |

|

New checkpoints:

| Model Name | Control Image Overview | Control Image Example | Generated Image Example |

|---|---|---|---|

| lllyasviel/control_v11e_sd15_ip2p Trained with pixel to pixel instruction |

No condition . |  |

|

| lllyasviel/control_v11p_sd15_inpaint Trained with image inpainting |

No condition. |  |

|

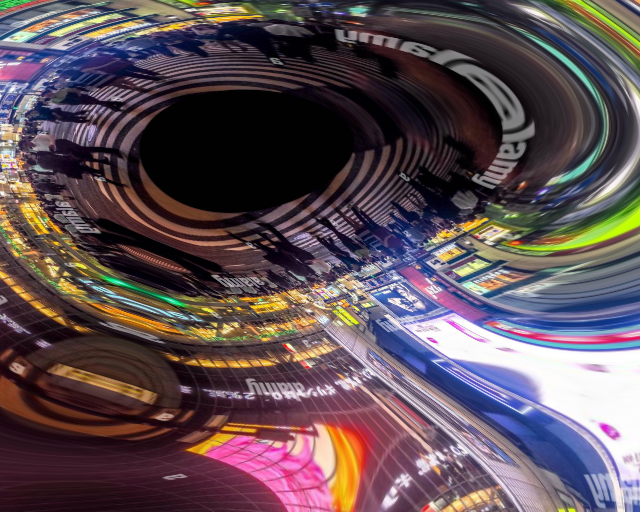

| lllyasviel/control_v11e_sd15_shuffle Trained with image shuffling |

An image with shuffled patches or regions. |  |

|

| lllyasviel/control_v11p_sd15s2_lineart_anime Trained with anime line art generation |

An image with anime-style line art. |  |

|

All commits

- [Tests] Speed up panorama tests by @sayakpaul in #3067

- [Post release] v0.16.0dev by @patrickvonplaten in #3072

- Adds profiling flags, computes train metrics average. by @andsteing in #3053

- [Pipelines] Make sure that None functions are correctly not saved by @patrickvonplaten in #3080

- doc string example remove from_pt by @yiyixuxu in #3083

- [Tests] parallelize by @patrickvonplaten in #3078

- Throw deprecation warning for return_cached_folder by @patrickvonplaten in #3092

- Allow SD attend and excite pipeline to work with any size output images by @jcoffland in #2835

- [docs] Update community pipeline docs by @stevhliu in #2989

- Add to support Guess Mode for StableDiffusionControlnetPipleline by @takuma104 in #2998

- fix default value for attend-and-excite by @yiyixuxu in #3099

- remvoe one line as requested by gc team by @yiyixuxu in #3077

- ddpm custom timesteps by @williamberman in #3007

- Fix breaking change in

pipeline_stable_diffusion_controlnet.pyby @remorses in #3118 - Add global pooling to controlnet by @patrickvonplaten in #3121

- [Bug fix] Fix img2img processor with safety checker by @patrickvonplaten in #3127

- [Bug fix] Make sure correct timesteps are chosen for img2img by @patrickvonplaten in #3128

- Improve deprecation warnings by @patrickvonplaten in #3131

- Fix config deprecation by @patrickvonplaten in #3129

- feat: verfication of multi-gpu support for select examples. by @sayakpaul in #3126

- speed up attend-and-excite fast tests by @yiyixuxu in #3079

- Optimize log_validation in train_controlnet_flax by @cgarciae in #3110

- make style by @patrickvonplaten (direct commit on main)

- Correct textual inversion readme by @patrickvonplaten in #3145

- Add unet act fn to other model components by @williamberman in #3136

- class labels timestep embeddings projection dtype cast by @williamberman in #3137

- [ckpt loader] Allow loading the Inpaint and Img2Img pipelines, while loading a ckpt model by @cmdr2 in #2705

- add from_ckpt method as Mixin by @1lint in #2318

- Add TensorRT SD/txt2img Community Pipeline to diffusers along with TensorRT utils by @asfiyab-nvidia in #2974

- Correct

Transformer2DModel.forwarddocstring by @off99555 in #3074 - Update pipeline_stable_diffusion_inpaint_legacy.py by @hwuebben in #2903

- Modified altdiffusion pipline to support altdiffusion-m18 by @superhero-7 in #2993

- controlnet training resize inputs to multiple of 8 by @williamberman in #3135

- adding custom diffusion training to diffusers examples by @nupurkmr9 in #3031

- Update custom_diffusion.mdx by @mishig25 in #3165

- Added distillation for quantization example on textual inversion. by @XinyuYe-Intel in #2760

- make style by @patrickvonplaten (direct commit on main)

- Merge branch 'main' of https://github.com/huggingface/diffusers by @patrickvonplaten (direct commit on main)

- Update Noise Autocorrelation Loss Function for Pix2PixZero Pipeline by @clarencechen in #2942

- [DreamBooth] add text encoder LoRA support in the DreamBooth training script by @sayakpaul in #3130

- Update Habana Gaudi documentation by @regisss in #3169

- Add model offload to x4 upscaler by @patrickvonplaten in #3187

- [docs] Deterministic algorithms by @stevhliu in #3172

- Update custom_diffusion.mdx to credit the author by @sayakpaul in #3163

- Fix TensorRT community pipeline device set function by @asfiyab-nvidia in #3157

- make

from_flaxwork for controlnet by @yiyixuxu in #3161 - [docs] Clarify training args by @stevhliu in #3146

- Multi Vector Textual Inversion by @patrickvonplaten in #3144

- Add

Karras sigmasto HeunDiscreteScheduler by @youssefadr in #3160 - [AudioLDM] Fix dtype of returned waveform by @sanchit-gandhi in #3189

- Fix bug in train_dreambooth_lora by @crywang in #3183

- [Community Pipelines] Update lpw_stable_diffusion pipeline by @SkyTNT in #3197

- Make sure VAE attention works with Torch 2_0 by @patrickvonplaten in #3200

- Revert "[Community Pipelines] Update lpw_stable_diffusion pipeline" by @williamberman in #3201

- [Bug fix] Fix batch size attention head size mismatch by @patrickvonplaten in #3214

- fix mixed precision training on train_dreambooth_inpaint_lora by @themrzmaster in #3138

- adding enable_vae_tiling and disable_vae_tiling functions by @init-22 in #3225

- Add ControlNet v1.1 docs by @patrickvonplaten in #3226

- Fix issue in maybe_convert_prompt by @pdoane in #3188

- Sync cache version check from transformers by @ychfan in #3179

- Fix docs text inversion by @patrickvonplaten in #3166

- add model by @patrickvonplaten in #3230

- Allow return pt x4 by @patrickvonplaten in #3236

- Allow fp16 attn for x4 upscaler by @patrickvonplaten in #3239

- fix fast test by @patrickvonplaten in #3241

- Adds a document on token merging by @sayakpaul in #3208

- [AudioLDM] Update docs to use updated ckpt by @sanchit-gandhi in #3240

- Release: v0.16.0 by @patrickvonplaten (direct commit on main)

Significant community contributions

The following contributors have made significant changes to the library over the last release:

- @1lint

- add from_ckpt method as Mixin (#2318)

- @asfiyab-nvidia

- @nupurkmr9

- adding custom diffusion training to diffusers examples (#3031)

- @XinyuYe-Intel

- Added distillation for quantization example on textual inversion. (#2760)

- @SkyTNT

- [Community Pipelines] Update lpw_stable_diffusion pipeline (#3197)