
- This repo is no longer active, sorry guys, I no longer want to keep working on this :)

I've seen multiple projects on GitHub that crawl the deep web, but most of them did not meet my standards for deep-web OSINT. So I decided to create my own deep web OSINT tool.

This tool serves as a reminder that OPSEC best practices should always be followed on the deep web.

The author of this project is not responsible for any possible harm caused by the usage of this tool.

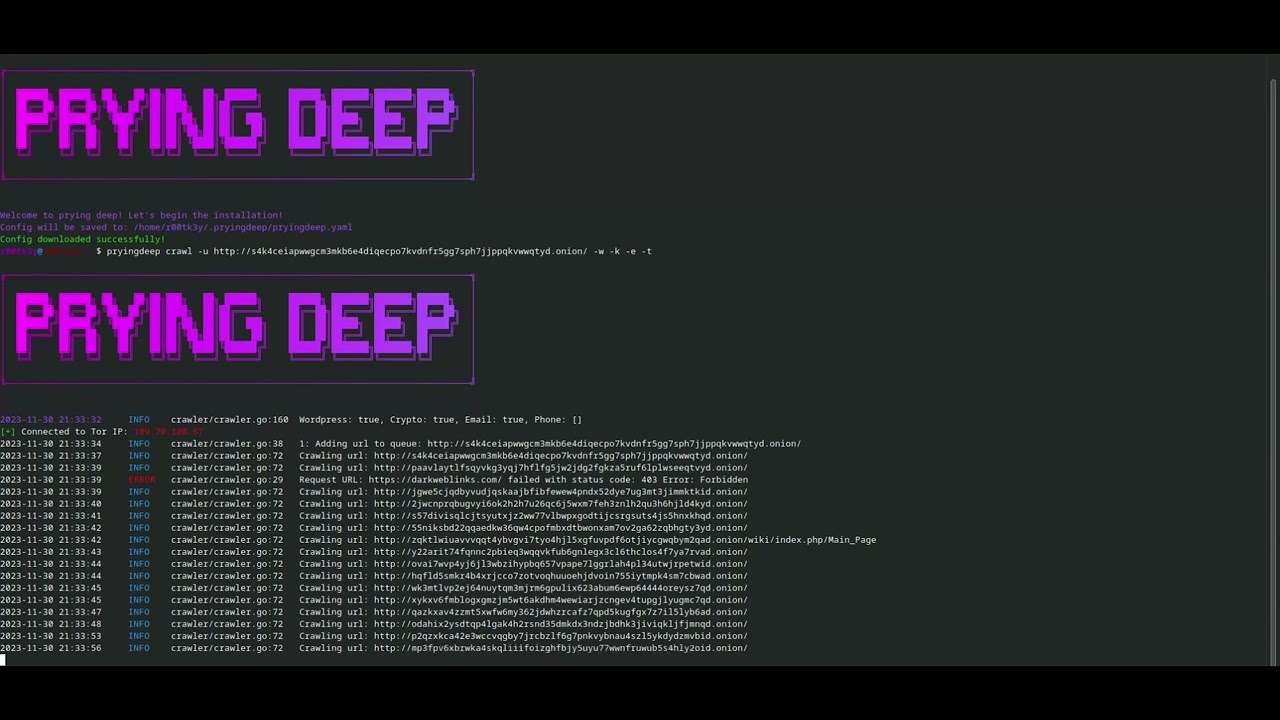

Prying Deep crawls dark-web and clearnet platforms and extracts as much intelligence from them as it can.

Before you can use our OSINT tool, please ensure you have the following dependencies installed:

- Docker (optional): follow the official installation instructions for your operating system in the Docker Installation Guide.

- Go (required)

- PostgreSQL (required if you don't use Docker): see the PostgreSQL Installation guide, and make sure the values in your `pryingdeep.yaml` match the environment in `docker-compose.yaml`.
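The database values have to agree because a client ultimately combines host, port, user, password and database name into a single connection string. The Go sketch below shows that assembly; `DBConfig` and its fields are hypothetical stand-ins for whatever the database section of `pryingdeep.yaml` actually contains, and the values in `main` are placeholders, not the project's defaults.

```go
package main

import (
	"fmt"
	"net"
	"net/url"
	"strconv"
)

// DBConfig is a hypothetical mirror of the database settings in
// pryingdeep.yaml; the real field names may differ. The values must match
// the PostgreSQL environment defined in docker-compose.yaml.
type DBConfig struct {
	Host     string
	Port     int
	User     string
	Password string
	Name     string
	SSLMode  string
}

// DSN builds a PostgreSQL connection URL; url.UserPassword escapes
// characters such as '@' in the credentials.
func (c DBConfig) DSN() string {
	u := url.URL{
		Scheme: "postgres",
		User:   url.UserPassword(c.User, c.Password),
		Host:   net.JoinHostPort(c.Host, strconv.Itoa(c.Port)),
		Path:   "/" + c.Name,
	}
	q := url.Values{}
	q.Set("sslmode", c.SSLMode)
	u.RawQuery = q.Encode()
	return u.String()
}

func main() {
	// Placeholder values for illustration only.
	cfg := DBConfig{Host: "localhost", Port: 5432, User: "pryingdeep", Password: "change-me", Name: "pryingdeep", SSLMode: "disable"}
	fmt.Println(cfg.DSN())
}
```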

To install with `go install`:

- Install the binary via: `go install -v github.com/iudicium/pryingdeep/cmd/pryingdeep@latest`

- Run `pryingdeep install` to install the config files.

- Adjust the values inside the config folder to your needs.

To build from source instead:

- Clone the repo: `git clone https://github.com/iudicium/pryingdeep.git`

- Adjust the values in the `.yaml` configuration, either through flags or manually. The database, logger and tor settings all require manual configuration. You will need to read the Colly docs; also refer to the Config Explanation.

- Build the binary via either `go build` inside the `cmd/pryingdeep` directory, or `go build cmd/pryingdeep/pryingdeep.go` from the root directory (the binary will be placed there as well).

To run pryingdeep inside a Docker container, use this command: `docker-compose up`

Read more about each parameter here: config

Read more about building and running our Tor container here: Tor

Pryingdeep specializes in collecting information about dark-web/clearnet websites.

This tool was specifically built to extract as much information as possible from a .onion website.

Usage:

`pryingdeep [command]`

Available Commands:

- `completion`: Generate the autocompletion script for the specified shell

- `crawl`: Start the crawling process

- `export`: Export the collected data into a file

- `help`: Help about any command

- `install`: Install the config files

Flags:

- `-c, --config string`: Path to the .yaml configuration (default "pryingdeep.yaml")

- `-h, --help`: Help for pryingdeep

- `-z, --save-config`: Save chosen options to your .yaml configuration

- `-s, --silent`: Disable logging and run silently
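To script a crawl from Go, the command can be assembled with `os/exec`. This sketch uses only the `-c/--config` and `-s/--silent` flags listed above and assumes they are accepted after the `crawl` subcommand (the help layout matches Cobra, where root flags are often, but not always, declared persistent). `crawlCommand` is a hypothetical helper, and crawl-specific flags are deliberately left out.

```go
package main

import (
	"fmt"
	"os"
	"os/exec"
)

// crawlCommand (hypothetical helper) builds, but does not start, a
// "pryingdeep crawl" invocation using the documented global flags.
func crawlCommand(configPath string, silent bool) *exec.Cmd {
	args := []string{"crawl", "--config", configPath}
	if silent {
		args = append(args, "--silent")
	}
	cmd := exec.Command("pryingdeep", args...)
	// Forward the crawler's output to this process.
	cmd.Stdout = os.Stdout
	cmd.Stderr = os.Stderr
	return cmd
}

func main() {
	cmd := crawlCommand("pryingdeep.yaml", true)
	fmt.Println(cmd.Args)
	// cmd.Run() would start the crawl, assuming pryingdeep is on PATH.
}
```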

TODO:

- Add a user to the "tor" Docker container so that it doesn't run as root

- Acquire a Shodan API key for testing the favicon module

- Find a way to acquire the IP address of the server

- Implement the scan command

- Implement file identification and search

- Move tests into their corresponding packages

Contributing:

- Fork the project

- Check out the dev branch (`git checkout dev`)

- Add proper documentation to your code

- Use `goimports` to format your code

- Submit a pull request with a detailed description of what has been changed

Distributed under the GPL-3.0 license. See LICENSE for more information.

If you have found this repository useful and feel generous, you can donate some Monero (XMR) to the following address:

48bEkvkzP3W4SGKSJAkWx2V8s4axCKwpDFf7ZmwBawg5DBSq2imbcZVKNzMriukuPqjCyf2BSax1D3AktiUq5vWk1satWJt

Thank you!